(Disclaimer: before we start, I’m not a developer or a software engineer. So if, despite my efforts, there are still mistakes in this article, please let me know!)

Datadog? What?

Datadog (NASDAQ:DDOG) is not an easy company to understand if you don’t work in software (hence the disclaimer above). I will try to dissect the company so that, by the end of the article, you hopefully understand what it does and how it makes money. I also take a brief look at the upcoming earnings.

An introduction to Datadog and its history

Datadog was founded in 2010 by Olivier Pomel and Alexis Lê-Quôc, who still lead the company: Pomel as CEO and Lê-Quôc as CTO (Chief Technology Officer). The two Frenchmen are long-time friends and colleagues. They met at the Ecole Centrale in Paris, where they both earned computer science degrees.

(Olivier Pomel, from the company’s website)

Olivier Pomel is one of the original authors of VLC Media Player, which many of you will know, or at least recognize by its logo.

(The VLC Media Player icon)

Pomel and Lê-Quôc both worked at Wireless Generation, a company that built data systems for K-12 teachers. For those who don’t know the American educational system, K-12 covers all years from kindergarten through 12th grade, roughly ages 5 to 18. K-12 has three stages: elementary school (K-5), middle school (grades 6-8) and high school (grades 9-12).

Wireless Generation is now called Amplify, and it offers assessments and curriculum resources to schools. Wireless Generation was sold to News Corp in 2010, which was the signal for the two friends to go and found their own company. Pomel was VP of Technology at Wireless Generation, and he grew his team from a handful of people to almost 100 of the best engineers in New York.

Yes, you read that right: New York. Because Pomel and Lê-Quôc knew many people in New York’s developer scene, Datadog is one of the few big tech companies not based in Silicon Valley. The company’s headquarters are still in Manhattan today, on 8th Avenue in the New York Times Building, close to Times Square and the Museum of Modern Art.

Before Wireless Generation, Pomel also worked at IBM Research and several internet startups.

Alexis Lê-Quôc is Pomel’s co-founder, friend, and long-time colleague. He is the current CTO of Datadog.

(Alexis Lê-Quôc, from the company’s website)

Alexis Lê-Quôc served as Director of Operations at Wireless Generation, where he also built a team, as well as top-notch infrastructure. He also worked at IBM Research and at other companies such as Orange and Neomeo.

DevOps, Very Important For Datadog

He has long been a proponent of the DevOps movement, and that is important for understanding Datadog. The DevOps movement tried to solve a common problem: developers and operators worked side by side, or even against each other, almost as enemies. Developers often blamed the operations side when there was a problem (for example, a database that was not up to date), and operators blamed developers (a mistake in the code). DevOps focuses on bringing the two sides together to make everything more frictionless, through shared teams, good communication and as much integration between the teams as possible.

What was missing was software that gave DevOps teams a unified platform, and Datadog helped fill that gap. If you want to know where a problem lies, it helps to have a central observability platform that developers and operators share, and Datadog set itself the task of building one.

As a tech company in New York, Datadog initially had quite a lot of trouble raising money. Once it had secured funding, it started building and released its first product in 2012, just ten years ago: a cloud infrastructure monitoring service with dashboards, alerting and visualizations.

In 2014, Datadog expanded to include AWS, Azure, Google Cloud Platform, Red Hat OpenShift and others.

Because of the founders’ French origin, it was natural for them to think internationally from the start. Quite early in its history, in 2015, just three years after launching its first product, the company set up a large office and R&D center in France to conquer Europe.

Also in 2015, it made a great acquisition: Mortar Data. Up to then, Datadog only aggregated data from servers, databases and applications to unify the platform for application performance. That was already revolutionary at the time, and Datadog already had customers like Netflix (NFLX), MercadoLibre (MELI) and Spotify (SPOT). But Mortar Data added meaningful insights to Datadog’s platform, which allowed Datadog’s customers to improve their applications continuously.

Datadog really needed this, as companies like Splunk (SPLK) and New Relic (NEWR) had done, or were in the process of doing, the same. Datadog was seen as a competitor of New Relic at the time, and to a certain extent, that is still true today.

In 2017, Datadog made another French acquisition: Logmatic.io, which specialized in searching and visualizing logs. It made Datadog the first to offer APM (application performance monitoring), infrastructure metrics and log management on a single platform.

In 2019, Datadog bought Madumbo, another French company, which had built an AI-based application testing platform. Thanks to its self-learning capabilities, the platform becomes better and better at finding weak links and reporting them, without anyone needing to write additional code. Instead, it interacts with the application as organically as possible, through test e-mails, password testing and many other interactions, while testing everything for speed and functionality. The bot can also detect JavaScript weaknesses. The capability was immediately added to Datadog’s core platform.

Also in 2019, Datadog founded a Japanese subsidiary and in September of 2019, Datadog went public.

(The Datadog IPO; source: CNBC)

Before its IPO, Cisco (CSCO) tried to buy Datadog for $8B, a price above its IPO range. Here is how Pomel thought about the offer:

Wow this is a lot of money! But at the same time I see all this potential and everything else in front of us and there’s much more we can build

Datadog decided not to sell, and on its first day of trading, its valuation jumped to almost $11B.

The name Datadog is a remarkable one. None of the founders had dogs or particularly liked them. At Wireless Generation, Pomel and Lê-Quôc named their production servers “dogs”, their staging servers “cats” and so on. “Data dogs” were production databases, and some of those dogs were to be feared. Pomel:

“Data Dog 17” was the horrible, horrible, Oracle database that everyone lived in fear of. Every year it had to double in size and we sacrificed goats so the database wouldn’t go down.

So it was really the name of fear and pain and so when we started the company we used Datadog 17 as a code name, and we thought we’d find something nicer later. It turns out everyone remembered Datadog so we cut the 17 so it wouldn’t sound like a MySpace handle and we had to buy the domain name, but it turned out it was a good name in the end.

What Datadog does

Datadog describes what it does as ‘Modern monitoring & security’. I could explain what that means myself, but founder and CEO Olivier Pomel does a really good job of explaining at a high level what Datadog does, so why not let him do it?

Whenever you watch a movie online or whenever you buy something from a store, in the back end, there’s ten thousand or tens of thousands of servers and applications and various things that basically participate into completing that – either serving the video or making sure your credit cards go through with the vendor and clears everything with your bank.

What we do is actually instrument all of that, we instrument the machines, the applications, we capture all of the events – everything that’s taking place in there, all of the exhausts from those machines and applications that tell you what they’re doing, how they’re doing it, what the customers are doing.

We bring all that together and help the people who need to make sense of it understand what’s happening: is it happening for real, is it happening at the right speed, is it still happening, are we making money, who is churning over time. So we basically help the teams – whether they are in engineering, operations, product or business – to understand what these applications are doing in real time for their business.

In the old days, a development team built an application, and it took maybe six months before it was operational. For the next few years, that was it: no changes could be made. If the developers regretted a weakness, they had to wait a few years until the next upgrade.

That changed with the cloud. Software can now be upgraded constantly, and developers can make changes easily, without going through a slow administrative and technological process. If you ship some code and think of a better solution the next day, no problem. Moreover, Datadog will show you what isn’t working well.

Olivier Pomel gives a few examples of issues Datadog can help its customers with:

There’s a number of things our customers can’t do on their own. For example they don’t know what’s happening beyond their account on a cloud provider. One thing we do for them is we tell them when we detect an issue that is going to span across different customers on the cloud provider. We tell them “hey you’re having an issue right now on your AWS and it’s not just you. It’s very useful because otherwise they have no way to know and they see your screen will go red and they have to figure out why that is.

Other things we do is we‘re going to watch very large amounts of signals that they can’t humanly watch, so we’re going to look at millions of metrics and we’re going to tell them the ones that we know for sure are important and not behaving right now, even if they didn’t know that already, if they didn’t know “I should watch this”, “I should put an alert on that”, “I should go and figure out if this changes”. These are examples of what we do for them.
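To make Pomel’s first example a bit more concrete, here is a minimal Python sketch of how an issue that spans many customers could be told apart from one that affects a single customer. It is not Datadog’s actual method: the input format (one error rate per customer per cloud region), the function name `provider_wide_issues` and all the thresholds are hypothetical choices for illustration.

```python
from collections import defaultdict


def provider_wide_issues(observations, error_threshold=0.05,
                         share_threshold=0.5, min_customers=3):
    """Flag (provider, region) pairs where many customers are unhealthy at once.

    observations: iterable of (customer, provider, region, error_rate) tuples
    for one time window. All thresholds are hypothetical illustrations.
    Returns a sorted list of ((provider, region), unhealthy_share) pairs.
    """
    # Group each customer's error rate by the cloud location it runs in.
    by_location = defaultdict(dict)
    for customer, provider, region, error_rate in observations:
        by_location[(provider, region)][customer] = error_rate

    flagged = []
    for location, rates in by_location.items():
        if len(rates) < min_customers:
            continue  # too few customers to tell "just you" from "everyone"
        unhealthy = sum(1 for rate in rates.values() if rate > error_threshold)
        share = unhealthy / len(rates)
        if share >= share_threshold:
            flagged.append((location, share))
    return sorted(flagged)
```

The `min_customers` guard is the design choice that matters most here: with only one or two customers in a region, there is no way to say “it’s not just you.”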

The problems that Datadog solves

Datadog provides observability, which in turn limits downtime, gives control over development and deployment, helps find and fix problems, and provides insight into every necessary detail on a unified platform.

To make it more concrete and show where Datadog can make a difference, Olivier Pomel has a good way of explaining exactly which problem Datadog solves. He talks about Wireless Generation, where he headed development and Alexis Lê-Quôc headed operations.

I was running the development team, and he was running the operation team. We knew each other very well, we had worked together, we were very good friends. We started the teams from scratch so we hired everyone, and we had a “no jerks” policy for hiring, so we were off to a good start. Despite all that, we ended up in a situation where operations hated development, development hated operations, we were finger pointing all day.

So the starting point for Datadog was that there must be a better way for people to talk to each other. We wanted to build a system that brought the two sides of the house together, all of the data was available to both and they speak the same language and see the same reality.

It turns out, it was not just us, it was the whole industry that was going this way.

Datadog covers what it calls ‘the three pillars of observability’: metrics, traces and logs (among other things).

A metric is a data point that is measured and tracked over time, such as CPU usage or request latency. Metrics are used to assess, compare and track code production or performance.

Traces capture a program’s execution: the metadata that connects every step of a request. When you clicked on this article, the click took you from the link in your e-mail to this page, but behind the scenes, the article was being retrieved from a database. Those connections can be found in traces, which are often used for debugging or for making the software better.

Logs are records of events generated by any participant in any of the systems. There are system logs (which have to do with the operating system), application logs (which have to do with the activity in the application, the interactions) and security logs (which record access and identity).
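The three pillars are easiest to picture as data. Below is a minimal Python sketch with hypothetical record shapes (`Metric`, `Span`, `LogRecord` and `correlate` are illustrative names, not Datadog’s data model). The idea it shows: a trace is a set of spans sharing a `trace_id`, and a log line that carries the same `trace_id` can be linked to the request that produced it. The test data mirrors the article example above, a page load that includes a database query.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Metric:
    """A data point tracked over time (pillar 1)."""
    name: str                       # e.g. "db.query.latency_ms"
    timestamp: float                # seconds since the epoch
    value: float
    tags: Dict[str, str] = field(default_factory=dict)


@dataclass
class Span:
    """One step of a request; spans sharing a trace_id form a trace (pillar 2)."""
    trace_id: str
    span_id: str
    parent_id: Optional[str]        # None for the root span
    operation: str                  # e.g. "GET /article" or "db.query"
    duration_ms: float


@dataclass
class LogRecord:
    """An event emitted by some component (pillar 3)."""
    timestamp: float
    level: str                      # e.g. "INFO" or "ERROR"
    message: str
    trace_id: Optional[str] = None  # lets a log line be joined to its trace


def correlate(trace_id: str, spans: List[Span], logs: List[LogRecord]) -> dict:
    """Gather one request's spans and logs, and point at its slowest child step."""
    trace_spans = [s for s in spans if s.trace_id == trace_id]
    trace_logs = [log for log in logs if log.trace_id == trace_id]
    children = [s for s in trace_spans if s.parent_id is not None]
    slowest = max(children, key=lambda s: s.duration_ms, default=None)
    return {"spans": trace_spans, "logs": trace_logs, "slowest_child": slowest}
```

Joining on a shared identifier is the whole point: in separate tools, a slow `db.query` span and the matching error log would sit in different silos.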

Companies used to need a separate software solution for each. For metrics, companies had monitoring software like Graphite. For traces, developers needed other software: APM, or application performance monitoring, such as New Relic (NEWR). And for logs, there was log management software like Splunk (SPLK).

These platforms didn’t talk to each other, so developers or operators had to open each one separately and compare the silos manually. That didn’t make sense, of course. Problems often crossed those borders, so it made sense to unify everything on one platform, and that’s exactly what Datadog did.

This allows observability teams to act much faster, especially because Datadog also provides the context of why something unexpected happens.

The solutions that companies use are more and more complex, weaving together more applications, multi-cloud hosting, more APIs, bigger or more numerous teams working on separate projects simultaneously, edge computing and so on. More than ever, there is a need for ‘the one to rule them all’ when it comes to observability, and that is the fight Datadog seems to have won.

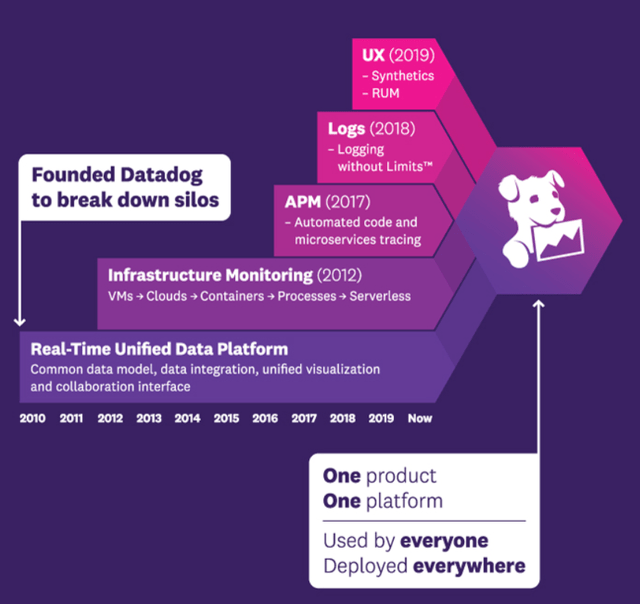

If you look at the company’s timeline, you see that initially, it only offered infrastructure monitoring, in other words metrics. Along the way, Datadog added logs and traces, but also other products.

(Source: Datadog’s S-1)

As you can see, once Datadog had added all three pillars of observability, it didn’t rest on its laurels.

In 2019, it introduced RUM, or real-user monitoring. It’s a product that lets Datadog customers see how real users interact with their products (a website, for example, or a game). Think about how many people who have downloaded a game click on the explanation before playing and which mistakes they still make, how many start playing immediately, whether they can find the play button fast enough, and so on. Or think about new accounts: if sign-ups drop steeply, Datadog will flag it and engineers can investigate. Maybe an update introduced a bug that stops users from logging in with their Apple account, for example.
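Flagging a “steep drop” requires some notion of normal. Here is a minimal Python sketch that assumes a simple rule I chose for illustration, not Datadog’s actual anomaly detection. It compares today’s new-account count with the mean and standard deviation of the previous days, and it alerts when today falls more than `k` standard deviations below the mean. The function name and the default `k=3` are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def signup_drop_alert(history: Sequence[float], today: float, k: float = 3.0) -> bool:
    """Return True when today's sign-ups fall far below the recent baseline.

    history: daily new-account counts for the preceding days (at least two).
    k: how many standard deviations below the mean counts as a steep drop.
    """
    if len(history) < 2:
        raise ValueError("need at least two days of history")
    baseline = mean(history)
    spread = stdev(history)  # sample standard deviation
    return today < baseline - k * spread
```

With a week of roughly 1,000 sign-ups a day (mean 1,000, standard deviation about 13), this rule alerts below about 960. A real system would also have to handle weekly patterns, such as quieter weekends, which this simple rule ignores.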

I’m returning to synthetics in a minute, but first I want to mention security, which is not yet on the timeline above. As we all know, security has become much more important than it was just a few years ago, so it’s important to integrate security people into the DevOps team as well and make it a DevSecOps team. Datadog has already adapted to the new DevSecOps movement.

It introduced the Datadog Cloud Security Platform in August 2021, which means that it now offers full-stack security context on top of its observability capabilities. Again, just like with DevOps, the company is early in what is clearly a developing trend (pun not intended) in software: the integration of security specialists into the core DevOps team. Datadog offers a unified platform for all three groups, and security issues can be linked to data across the infrastructure, the network and applications. That allows security teams to respond faster and to gain much more granular insights after a breach has been resolved.

Again, Datadog solves a real problem here. As more and more data moves to the cloud, security teams often have less and less visibility, while attacks become more and more sophisticated. That’s why it’s important to give these teams back that visibility, along with a tool to implement security, so that developers and operations can build security into every level of software, applications and infrastructure.

As I mentioned before, Datadog also added synthetics in 2019. Synthetics simulate user behavior to check that everything works as it should, even before any real users are on the system. That capability came in through Datadog’s acquisition of Madumbo, as we saw earlier. Pomel on synthetics:
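Here is a minimal Python sketch of the idea behind a scripted synthetic test. It runs a fixed user journey step by step, times each step, and stops at the first step that fails or is too slow. The `FakeShop` class stands in for a real application so that the example runs offline. It, the step names and the two-second limit are all hypothetical. Madumbo’s bot went further, because it learned which interactions to try instead of following a fixed script.

```python
import time
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]  # (step name, action returning True on success)


def run_synthetic_test(steps: List[Step], max_step_seconds: float = 2.0):
    """Run scripted 'user' steps in order; return (name, passed, seconds) per step.

    Stops at the first step that fails, raises, or is too slow,
    much like a real user who gives up.
    """
    results = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            passed = bool(action())
        except Exception:
            passed = False
        elapsed = time.perf_counter() - start
        if elapsed > max_step_seconds:
            passed = False
        results.append((name, passed, elapsed))
        if not passed:
            break
    return results


class FakeShop:
    """Hypothetical stand-in for a real web shop."""

    def __init__(self, apple_login_works: bool = True):
        self.apple_login_works = apple_login_works

    def load_home_page(self) -> bool:
        return True

    def log_in_with_apple(self) -> bool:
        return self.apple_login_works

    def add_item_to_cart(self) -> bool:
        return True


def shop_journey(shop: FakeShop) -> List[Step]:
    """The scripted journey a synthetic 'user' walks through."""
    return [
        ("load home page", shop.load_home_page),
        ("log in with Apple", shop.log_in_with_apple),
        ("add item to cart", shop.add_item_to_cart),
    ]
```

Run on a schedule, a check like this would catch a broken Apple login, as in the RUM example, even when no real users are online.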

There is an existing category for that. It’s not necessarily super interesting on its own. It tends to be a bit commoditized and it’s a little bit high churn, but it makes sense as part of a broader platform which we offer. When you have a broader platform, the churn goes away and the fact it is commoditized can actually differentiate by having everything else on the platform.

And then Pomel adds a short but very interesting sentence:

There’s a few like that, and there’s more we’re adding.

So, you shouldn’t expect the expansion of Datadog to stop anytime soon.

How Datadog makes money

In short, Datadog makes money through a SaaS (Software-as-a-Service) model. That means customers pay a recurring fee, for example monthly, instead of buying the software once. But let’s look at how this works in more detail.

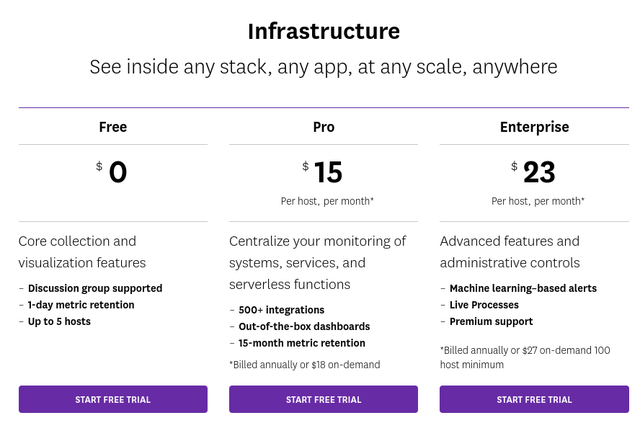

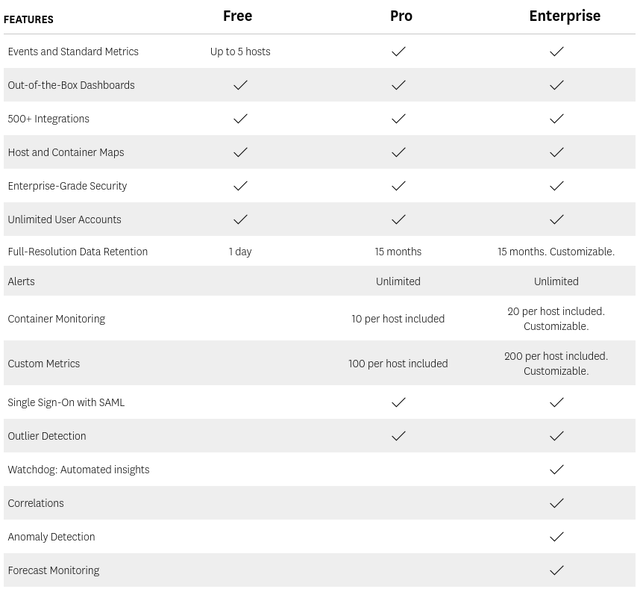

Datadog uses a land-and-expand model, starting with a free tier that is limited in volume. Basically, you can get infrastructure observability for free if you have up to five servers. Once you have more servers, you will have to pay, as it makes no sense to leave some of them unmonitored.
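Here is a minimal Python sketch of how host-based billing with a volume-limited free tier could be computed. The assumptions are all mine. The free tier covers up to five hosts. Once you go over that limit, you are on a paid plan and every host is billed at a flat `price_per_host`. That price is a hypothetical parameter, not a quoted Datadog price.

```python
FREE_TIER_MAX_HOSTS = 5  # free-tier limit assumed from the text above


def monthly_infra_cost(hosts: int, price_per_host: float) -> float:
    """Sketch of host-based billing with a free tier (hypothetical pricing).

    Assumption: at or under the free limit the bill is zero; above it,
    the customer is on a paid plan and every host is billed.
    """
    if hosts < 0:
        raise ValueError("hosts must be non-negative")
    if hosts <= FREE_TIER_MAX_HOSTS:
        return 0.0
    return hosts * price_per_host
```

With a made-up price of 10 per host, a team that lands with 3 hosts pays nothing, while the same team at 40 hosts pays 400 a month. That growth is the “expand” part of land-and-expand.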

(Source: Datadog)

This is how Datadog defines a host:

A host is any physical or virtual OS instance that you monitor with Datadog. It could be a server, VM, node (in the case of Kubernetes) or App Service Plan instance (in the case of Azure App Services).

This is what you get in the different plans:

(Source: Datadog)

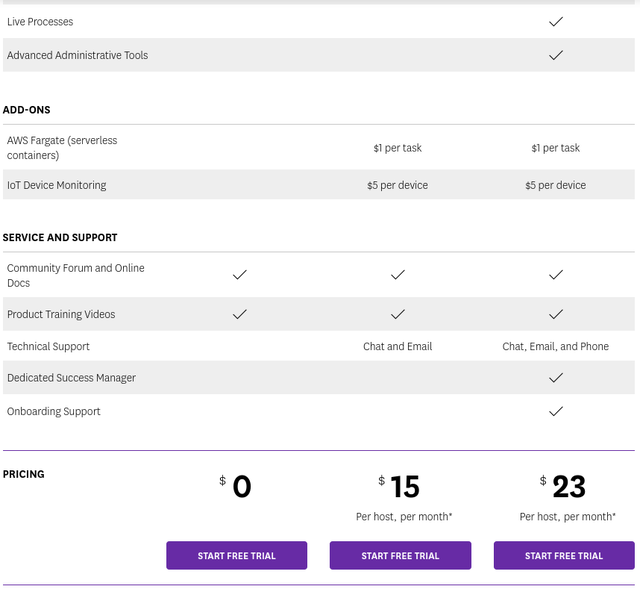

It’s important to know that this is just the infrastructure module. Datadog excels at cross-selling and upselling to existing customers, selling them several of these modules:

(Source: Datadog)

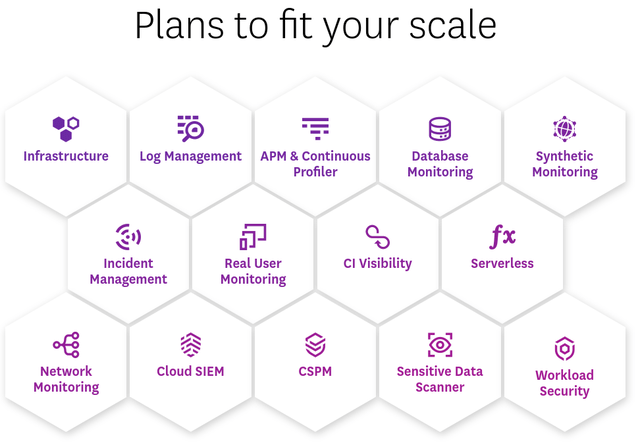

This is another example, for APM & Continuous Profiler.

(Source: Datadog)

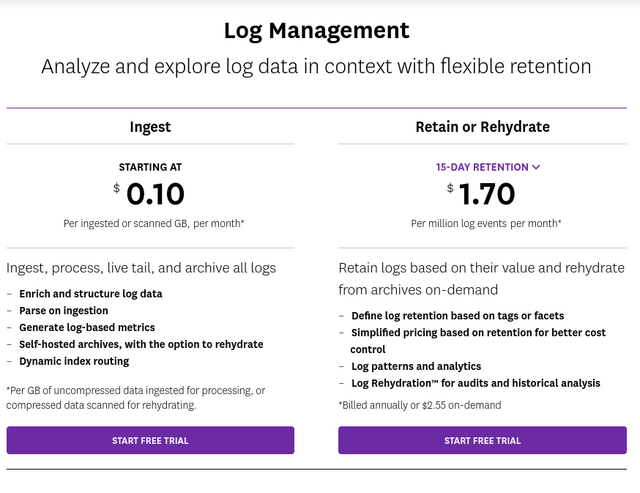

Other modules, like log management, use usage-based pricing:
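For comparison, here is a minimal sketch of usage-based pricing. It assumes a two-part bill, with one rate per GB ingested and one per million log events indexed. Both the structure and the rates are hypothetical inputs, so check Datadog’s pricing page for the real units.

```python
def monthly_log_cost(gb_ingested: float, events_indexed: int,
                     price_per_gb: float, price_per_million_events: float) -> float:
    """Usage-based bill: pay for what you send and for what you keep searchable.

    All rates are hypothetical parameters, not Datadog's published prices.
    """
    if gb_ingested < 0 or events_indexed < 0:
        raise ValueError("usage must be non-negative")
    ingest_cost = gb_ingested * price_per_gb
    index_cost = (events_indexed / 1_000_000) * price_per_million_events
    return ingest_cost + index_cost
```

Because the bill tracks usage, a customer who indexes only the logs they actually search can shrink the second term. That fits Pomel’s later point about letting customers align what they pay with the value they get.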

(Source: Datadog)

I won’t list the pricing for every module here. You can find them all on Datadog’s pricing page.

Datadog’s Sales Approach

The sales approach the company takes is really aimed at developers. When I hear Olivier Pomel talk about it, it reminds me a lot of the approach that Twilio’s founder and CEO Jeff Lawson takes to sales and to doing business in general, summarized in the title of his book: “Ask Your Developer.” The sales strategy is bottom-up: once developers are convinced, they convince their CIO or CTO, and then the big contracts follow.

For large enterprises, Datadog works a bit differently, but not that much. It first talks to the CIO and lets the CIO’s teams test the software (with the free tier) to get feedback. After a while, Datadog comes back, and that often results in a signed order form.

Olivier Pomel about this approach:

Small company or large company – the product is adopted the same way. The users in the end are very similar. When you’re a developer at a very large enterprise you don’t think of yourself differently as a developer at a start-up or smaller company. There’s more and more communities between those.

There are four types of sales teams at Datadog. The enterprise sales team sells to large companies; the customer success team takes care of onboarding and cross-selling to existing customers; the partner team works with resellers, referral partners, system integrators and other outside sellers; and the inside sales team focuses on bringing in new customers.

As you may guess, salespeople get a lot of training so that they stay on top of their industry. They also have to translate a customer’s problems into one or more product offerings.

Affordability is important to Datadog. Founder and CEO Olivier Pomel:

In terms of pricing philosophy though, we had to be fair in what we wanted to achieve with the price. And the number one objective for us was to be deployed as widely as possible precisely so we could bring all those different streams of data and different teams together. I wanted to make sure we were in a situation where customers were not deploying us in one place and then forgetting the rest because it can’t afford it.

Pomel also gives an interesting insight into how the company set its prices:

We looked at the overall share, what it would get, how much they would pay for their infrastructure, we decided which fraction of that we thought they could afford for us , then we divided that by the salary and infrastructure so we could actually get a price that scales.

Now the most important thing about pricing as we’ve been scaling it – and customers send us more and more data – is to make sure that customers have the control and they can align what they pay with the value of what they get.

This customer-centricity of the pricing model is an important point of differentiation.

The Earnings: What I Pay Attention To

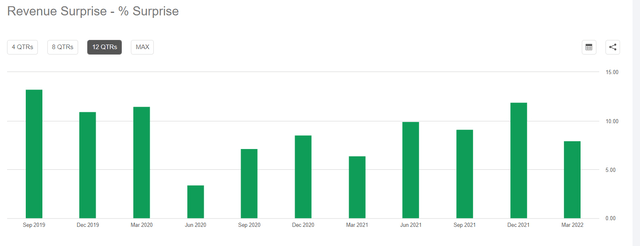

Datadog will report its earnings on Thursday. For me, revenue growth is very important. The consensus estimate for revenue is $381.28M, up 63.25% YoY, but Datadog has beaten the consensus in every single quarter since it became a public company.
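Here is a quick worked check of what “up 63.25% YoY” means. The consensus implies a revenue figure for the same quarter a year earlier. The sketch below is in Python and uses only the two numbers from the text. The function name is my own.

```python
def implied_prior_year(current: float, yoy_growth_pct: float) -> float:
    """Revenue a year earlier, given current revenue and YoY growth in percent."""
    return current / (1 + yoy_growth_pct / 100)


prior = implied_prior_year(381.28, 63.25)  # consensus figures, in $M
print(f"Implied year-ago quarter: ${prior:.2f}M")
```

So the consensus expects about $381M in revenue, against roughly $234M a year earlier.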

(Source: Seeking Alpha Premium)

The consensus for EPS (on an adjusted basis) stands at $0.15, but Datadog blew away the estimates in the previous quarter too: the consensus was $0.13 and the company brought in $0.24.

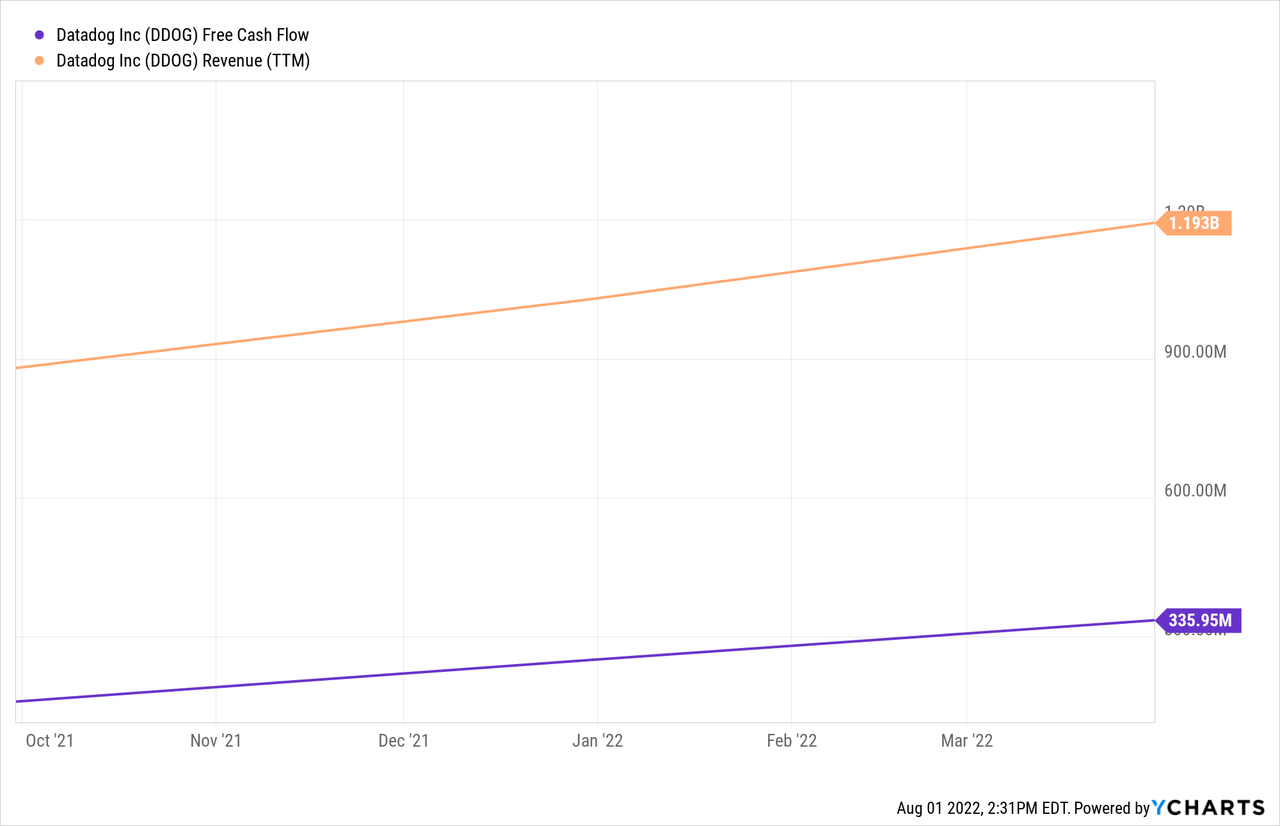

When you look at free cash flow margins, you see that Datadog is very profitable. Here are revenue and free cash flow:

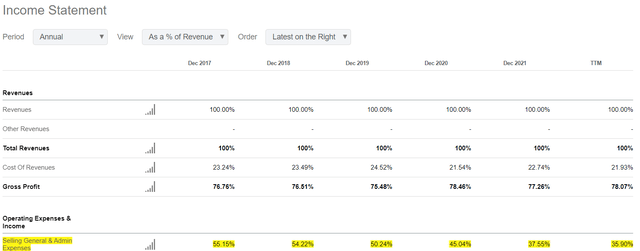

Free cash flow of $335.95M on total revenue of $1.193B means an FCF margin of 28%, and that margin is still improving. In the previous quarter, Q1 2022, the company had an FCF margin of almost 36%, which is very impressive, especially if you look at how little the company invests in sales compared to other high-growth companies. And SG&A (selling, general and administrative expenses) continues to go down as a percentage of revenue, despite very high revenue growth that could reach 70%.
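The FCF margin is simply free cash flow divided by revenue. Here is a short Python check with the article’s figures. The function name is my own.

```python
def fcf_margin(free_cash_flow: float, revenue: float) -> float:
    """Free cash flow as a fraction of revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return free_cash_flow / revenue


margin = fcf_margin(335.95, 1193.0)  # both in $M, figures from the text
print(f"FCF margin: {margin:.1%}")
```

The result, about 28.2%, matches the rounded 28% above.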

(Source: Seeking Alpha Premium)

Of course, with a forward P/S of 20 and a forward P/E of 134, the stock is not cheap. But if you are a long-term investor (for me, that means at least three years, preferably longer), I think Datadog is a very exciting stock to own and well worth the premium, especially given its high free cash flow. Let’s see if the company keeps executing when it announces its earnings on Thursday.

Some of you might wonder whether you should buy before earnings. I’m not a market timer; I invest for the long term. Every two weeks, I add money to my portfolio, and I often scale into positions over years. So investing is a constant process for me, not a point in time. Ideally, Datadog reports great earnings but the stock drops anyway over a small detail that doesn’t really matter. In that case, I would definitely add a bit more than usual.

In the meantime, keep growing!
