Justin Sullivan

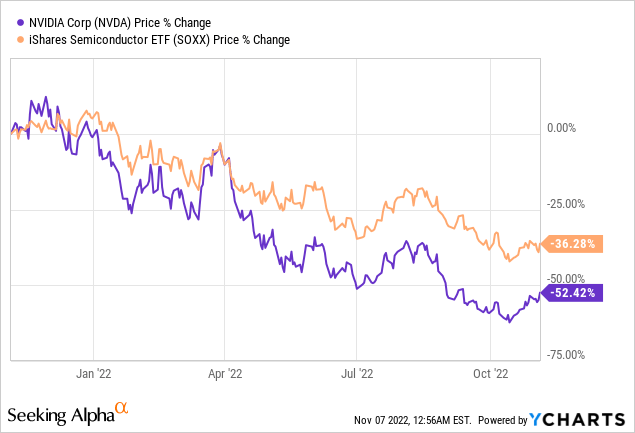

With U.S. export restrictions barring NVIDIA (NASDAQ:NVDA) from shipping some of its most advanced GPUs, such as the A100 and the H100, to China, the company could lose $400 million in revenue. Because of this loss, and because of the gloom hanging over the semiconductor industry amid demand concerns, the stock is down by more than 50% in the last year, as shown in the blue chart below. In the meantime, the iShares Semiconductor ETF (SOXX), which dedicates 7.65% of its assets to Nvidia’s shares, has suffered less.

Now, there has been some optimism around the company’s gaming business with its new RTX 40 series processors, but let us be realistic: you cannot expect people to play more games than they did during the Covid lockdowns.

Instead, the objective of this thesis is to explore the additional cloud AI (artificial intelligence) sales opportunities enabled by Nvidia’s GPU processors. Bearing in mind competition from Advanced Micro Devices (AMD) and Intel (INTC), SOXX can also be viewed as an alternative investment.

First, it is important to make the connection between AI and graphics processing units, or GPUs, and for this purpose, I take inspiration from a presentation given by Nvidia’s CEO seven months ago.

The Relation between AI and GPUs

The AI company turned a humble graphics card into the H100 GPU, a processor so powerful that it can now serve as a strategic advantage, not only for the U.S. military but also economically for America’s public cloud giants (hyperscalers) like Microsoft (MSFT), as I recently explained in an article.

H100 GPU (www.nvidia.com)

Here, the difference from a CPU (central processing unit), as supplied by Intel and required by default in all PCs and servers, is that the GPU, as its name implies, is built for graphics-intensive work, with Nvidia’s technology enabling many more computations to be performed at the same time, or in parallel. This means faster processing, which is why gaming application developers prefer Nvidia’s GPUs to Intel’s CPUs.

Exploring further, GPUs are also ideal for low-latency (high-speed) 3D applications spanning industries such as financials, life sciences, and logistics. One example is Amazon (AMZN) using Nvidia’s chips to design a digital twin (real-time replica) of its supply chain.

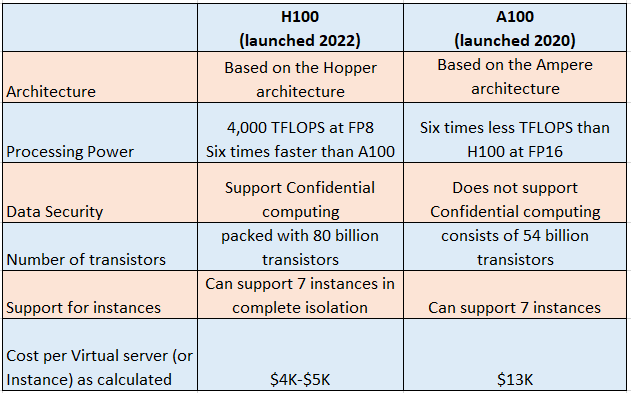

AI remains expensive, but the public cloud model has made it more affordable: companies no longer need to buy dedicated servers and can instead rent GPU time on a monthly, weekly, or even hourly basis from hyperscalers. Acting as an enabler, the H100 can be partitioned into 7 instances, a feat which could also be achieved by the previous-generation A100 (table below) but at the expense of per-instance isolation and IO virtualization. Without going into too many details, this means that each H100 can host 7 independent cloud tenants compared to only one for the A100.

This in turn translates into more flexible cost dynamics for IaaS (Infrastructure-as-a-Service) providers, whereby they can obtain more cloud revenue by renting the same physical H100 GPU to more customers. Now, this is a nice development, but it is also important to put a value on these opportunities in order to provide actionable insights for investors.

Valuing the Opportunities

According to Tom’s Hardware, one A100 costs around $13K, while an H100 with the same configuration costs $33K. However, since the Hopper can support 7 tenants while providing them with complete isolation, the cost comes to only 33/7, or about $4.7K, per tenant. This is just an estimate, as there are other factors like memory and networking to consider, but the bottom line is that the Hopper translates directly into a better return on investment for cloud providers.
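The per-tenant arithmetic above can be sketched in a few lines of Python. This is only an illustration under the article's assumptions: the card price is split evenly across tenants, the A100 is treated as single-tenant, and memory and networking costs are ignored. The function name `cost_per_tenant` is hypothetical.

```python
# Rough per-tenant hardware cost using the prices quoted in the text.
# Assumption: the card price is split evenly across tenants; memory and
# networking costs are ignored, as in the article's estimate.

def cost_per_tenant(card_price_usd: float, tenants: int) -> float:
    """Return the card price divided evenly among its tenants."""
    if tenants < 1:
        raise ValueError("tenants must be at least 1")
    return card_price_usd / tenants

a100 = cost_per_tenant(13_000, 1)  # A100 treated as single-tenant, per the article
h100 = cost_per_tenant(33_000, 7)  # H100 split into 7 isolated instances

print(f"A100: ${a100:,.0f} per tenant")
print(f"H100: ${h100:,.0f} per tenant")
```

Changing the tenant count shows how sensitive the estimate is to utilization: an H100 that is only rented to three or four tenants loses much of its per-tenant cost advantage.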

Table built using data from (www.nvidia.com)

On top of that, for security purposes, the H100 supports confidential computing for protecting data that is in use. Encryption and other protection mechanisms already exist for data that is stored in a database or in transit, but there was none for protecting data being processed by AI algorithms until the advent of the H100 for GPU-based tasks.

Therefore, Nvidia is well placed to take on the global cloud AI market, which is forecast to grow by $10.22 billion between 2021 and 2026. However, some caution is warranted, as the 20.26% YoY growth expected for 2022 may not be achieved due to supply chain issues. The cloud AI GPUs supplied by Nvidia were also impacted by supply disruptions, but the company still managed to increase its data center segment sales by 61% in the second quarter of 2022 (ending in July) to $3.81 billion compared to 2021, with revenues from hyperscalers doubling in the period.

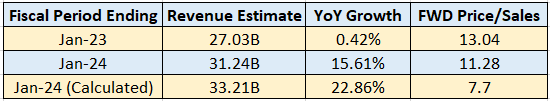

Thus, with the H100 offering better ROI, revenue from the Compute & Networking segment (which includes Data Center platforms and systems for AI, HPC, and accelerated computing) could get a boost. The segment constituted 41% of overall revenues for fiscal 2022 (ending January this year) with $15.87 billion. I estimate that it could generate $33.21 billion (15.87 x 1.61 x 1.3) in fiscal 2024, assuming 61% and 30% growth in fiscal 2023 and 2024, respectively. This would constitute an increase of nearly $2 billion over the $31.24 billion estimated by analysts for fiscal 2024, as shown in the table below.
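For readers who want to vary the growth assumptions, the compounding behind this estimate can be reproduced as follows. This is a minimal sketch: the base figure and growth rates are the ones stated in the text, while the function name `project_revenue` is hypothetical.

```python
from typing import Sequence

def project_revenue(base: float, growth_rates: Sequence[float]) -> float:
    """Compound a base revenue figure through successive yearly growth rates."""
    revenue = base
    for rate in growth_rates:
        revenue *= 1 + rate
    return revenue

base_fy2022 = 15.87      # $ billion, segment revenue quoted in the text
analyst_fy2024 = 31.24   # $ billion, analyst estimate quoted in the text

# Assumed growth: 61% in fiscal 2023, then 30% in fiscal 2024.
projected_fy2024 = project_revenue(base_fy2022, [0.61, 0.30])
gap = projected_fy2024 - analyst_fy2024

print(f"Projected fiscal 2024: ${projected_fy2024:.1f}B")
print(f"Gap versus analysts:   ${gap:.1f}B")
```

Lowering the second-year rate from 30% to 20%, for example, is enough to put the projection below the analyst figure, which shows how much the thesis leans on sustained growth.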

Table Built using data from (www.seekingalpha.com)

This would in turn constitute growth of 22.86%, or about 7 percentage points more than the 15.61% predicted by analysts, which brings down the forward price-to-sales multiple from 11.28x to 7.7x ((11.28 x 15.61) / 22.86). The assumptions made are that gaming revenues remain constant, while shortfalls from China are offset by the Hopper being adopted by Dell (DELL), HPE (HPE), Inspur, and Supermicro, as well as by Database-as-a-Service providers like Oracle (ORCL), as cloud competition evolves more toward intelligent processing.
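The multiple adjustment used here is a simple proportional rescaling: the forward multiple is scaled by the ratio of consensus growth to the growth assumed in this thesis. The sketch below only reproduces that arithmetic; it is a heuristic as applied in the text, not a standard valuation formula, and the function name `rescaled_multiple` is hypothetical.

```python
def rescaled_multiple(multiple: float,
                      consensus_growth_pct: float,
                      thesis_growth_pct: float) -> float:
    """Scale a forward multiple by consensus growth over thesis growth."""
    if thesis_growth_pct <= 0:
        raise ValueError("thesis growth must be positive")
    return multiple * consensus_growth_pct / thesis_growth_pct

forward_ps = 11.28        # forward price-to-sales quoted in the text
consensus_growth = 15.61  # % growth predicted by analysts
thesis_growth = 22.86     # % growth implied by this thesis

adjusted_ps = rescaled_multiple(forward_ps, consensus_growth, thesis_growth)
growth_gap = thesis_growth - consensus_growth  # in percentage points

print(f"Adjusted P/S: {adjusted_ps:.1f}x")
print(f"Growth gap:   {growth_gap:.2f} percentage points")
```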

The Competition and the Rationale for SOXX

Achieving such a target will also depend on the supply chain being completely restored, and there is competition to consider. Here, the company competes with AMD’s MI100 and MI200 chips. Thus, while Nvidia should profit more with its H100, AMD should also profit from the shortage of GPUs currently impacting the industry. Looking at applications, use cases range from analyzing data from vehicle fleets in order to train AI models and build high-definition maps, to recommender systems, where data from various social media sources is analyzed in order to recommend the products that customers are most likely to buy.

Investors can view these two cases as using AI for decision-making instead of relying on manual data inputs (from human beings), which are not only time-consuming but also costly in the current high-inflation times.

Looking further, Intel also has AI accelerator cards specially built for data preprocessing, training, inferencing, and deployment. Furthermore, in addition to producing more advanced CPUs, it has also started to produce graphics chips called GPU Flex for cloud gaming purposes.

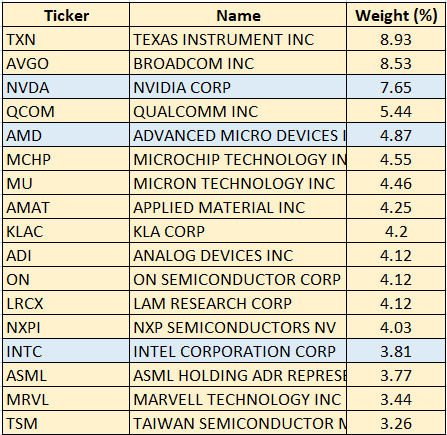

These are the reasons why another option would be to invest in SOXX, which has a 16.33% combined exposure to Nvidia, Intel, and AMD. In addition, as I elaborated previously, the iShares ETF offers better exposure to chip equipment makers, who should benefit the most from the CHIPS Act.

Table Built using data from (www.ishares.com)

This is the reason I prefer SOXX to the VanEck Semiconductor ETF (SMH), despite the latter’s cheaper fees, as shown in the comparison table below.

Concluding With A Dose Of Caution

Therefore, this thesis has made the case for investing in Nvidia on the basis that some of the innovations in its latest H100 processor can bring in an additional $2 billion, thereby offsetting the $400 million revenue shortfall from Chinese hyperscalers. In this connection, using the P/S of 7.7x, I obtain a target of $207.4 ((141.56 x 11.28) / 7.7) based on the current share price of $141.56. The company also has a superior profitability score of A+.
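The price target follows from assuming that the stock re-rates from the adjusted 7.7x multiple back to the current 11.28x forward multiple. A minimal sketch of that calculation, using only figures from the text (the function name `target_price` is hypothetical):

```python
def target_price(current_price: float,
                 current_multiple: float,
                 adjusted_multiple: float) -> float:
    """Scale the share price by the ratio of current to adjusted multiple."""
    if adjusted_multiple <= 0:
        raise ValueError("adjusted multiple must be positive")
    return current_price * current_multiple / adjusted_multiple

price = 141.56       # current share price quoted in the text
forward_ps = 11.28   # current forward price-to-sales
adjusted_ps = 7.7    # multiple implied by the higher revenue estimate

target = target_price(price, forward_ps, adjusted_ps)
upside_pct = (target / price - 1) * 100

print(f"Target price: ${target:.1f}")
print(f"Implied upside: {upside_pct:.1f}%")
```

The implied upside depends only on the ratio of the two multiples, so any error in the adjusted multiple feeds straight through to the target.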

However, this is for the long term, and while the company’s technology remains superior, there are still supply chain issues, despite some market optimism after news about China relaxing its Covid-Zero policy. Along the same lines, an escalation of geopolitical tensions around Taiwan could add volatility to Nvidia’s stock, since the company depends on Taiwan Semiconductor Manufacturing Company (TSM) for manufacturing its cutting-edge chips. Thus, the company’s stock is not immune to further fluctuations.

Now, with several new industry verticals consuming AI services through the cloud instead of spending a lot of money building expensive on-premise infrastructure, as they previously had to, it is also important to consider competitors AMD and Intel. In this respect, a judicious way to profit from cloud AI is through SOXX, which has suffered a 35.57% downside in the last year.

Table Built using data from (www.seekingalpha.com)

Thus, with a 20% upside, the ETF could reach the $395 (329 x 1.2) level, but it is important to be prudent, as the Federal Reserve’s aggressive interest rate hikes risk tipping the economy into a recession, thus impacting demand. Also, semiconductor companies are growth stocks and may be adversely impacted by the rotation from growth to value.

In these circumstances, invest only if you have a long time horizon, and given that volatility should persist, I have a neutral position on Nvidia and SOXX for the short-to-medium term.

Finally, at the forefront of GPU-based AI developments and with an ability to execute profitably, Nvidia simply cannot be ignored as cloud competition intensifies, with the void created by Chinese hyperscalers like Tencent (OTCPK:TCEHY) being denied semiconductors likely to be filled by other providers in America and Europe.
